A Brief History of Data: From 1945 to the Digital Age

Throughout history, data has been at the centre of human progress. From the first marks made in prehistoric times to its advanced use in AI, data has consistently evolved alongside human societies.

As societies grew more complex, written language, mathematical notation, and standardised measurement allowed information to be stored beyond human memory and shared across generations. In the modern era, computers and the internet transformed the use of data, making it a crucial asset for real-time decision-making and process automation.

In this article, we will retrace the incredible journey of data together and explore what it reveals about the future.

The Rise of Electronic Systems from WWII to Apollo 11

Our story begins in 1945. The world had just witnessed the most devastating conflict in human history. The fighting took place on land, at sea and in the air, pushing nations to innovate in order to outdo the enemy. Information was a strategic asset, and faster calculation was crucial to victory.

One development was the creation of smaller electronic systems that could be integrated into military hardware. Early computers were large and could only perform specific tasks, such as decoding messages. As they shrank in size, they replaced mechanical parts and could perform a wider range of tasks, offering the benefit of smaller, faster machines.

Once the war ended, research into data technology moved beyond purely military purposes and was increasingly carried out by governments and universities. From that point onwards, this technology attracted growing interest from a variety of fields, including business, science and engineering. The Dartmouth Workshop of 1956, for which the term 'artificial intelligence' was coined, is an example of this expanding attention.

In the decades following World War II, there was a succession of innovations, including the standardisation of data formats and the creation of the first programming languages for manipulating data. In particular, the British-born computer scientist E. F. Codd proposed the relational model in 1970. It became the basis of relational databases, which make it easier to organise and manage large amounts of data for analysis.
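To make the relational idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The tables, column names and sample rows are hypothetical and chosen only for illustration. The point is that data is stored in separate tables linked by a shared key, and a query combines them on demand.

```python
import sqlite3

# In-memory database; all table names, column names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (customer_id INTEGER REFERENCES customers(id), amount REAL)"
)

conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)", [(1, 30.0), (1, 12.5), (2, 8.0)]
)

# Join the two tables on their shared key and summarise orders per customer.
rows = conn.execute(
    """
    SELECT c.name, COUNT(*), SUM(o.amount)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id
    ORDER BY c.id
    """
).fetchall()

for name, order_count, total in rows:
    print(name, order_count, total)
```

Keeping customers and orders in separate tables avoids repeating customer details on every order, which is one of the practical benefits usually attributed to the relational approach.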

The Apollo Programme was a milestone for data-driven systems. Over the course of the missions, data moved progressively closer to the action, giving the astronauts real-time assistance with complex manoeuvres and calculations. This ultimately made the Apollo 11 mission a giant leap for mankind and for data innovation.

The Rise of Personal Computing and the Early Democratisation of Data

The years following the Apollo Programme were a pivotal period in the history of personal computing. It was during this time that young engineers built the first personal computers in their garages, making them available for public use for the first time.

Data technology was no longer confined to laboratories. Cost reductions and the standardisation of components made it easier for civilians to access this technology and to manipulate data themselves. Businesses progressively adopted personal computers so that their employees could handle data, which improved daily work such as accounting tasks.

In the same vein, data storage technologies evolved significantly. The introduction of external storage media, such as floppy disks, enabled users to save, transfer and share data more easily, further reinforcing the role of personal computing in both professional and personal contexts.

A key actor in this transformation was Microsoft, founded in 1975 by Bill Gates and Paul Allen. Unlike earlier hardware-focused innovators, Microsoft concentrated on software, recognising that operating systems and applications would become central to how users interacted with data.

With the spread of graphical user interfaces, notably through Microsoft Windows, interacting with data became more intuitive, shifting computing away from command-line expertise towards visual and user-friendly systems. This evolution reinforced the role of personal computers as everyday data tools, enabling widespread use of word processing, spreadsheets, and databases, and firmly anchoring data technology in modern professional and domestic life.

The Internet Revolution and the Emergence of Big Data and AI

In the 1990s, one technology that is widely used today changed everything: the World Wide Web. Invented by Tim Berners-Lee in 1989 and built on top of the Internet, it was a game changer for many businesses and individuals. From then on, documents and the data they contained could be linked together through hypertext.

Furthermore, people could communicate and collaborate on a larger scale than ever before. Data could be shared across networks, making it easier for employees within businesses to exchange information. From a user perspective, the internet paved the way for social networks, facilitating personal connections. For example, Facebook, launched in 2004, alone had around 3 billion active users in 2024, according to the company's own figures.

Internet technology was also integrated into everyday objects, such as alarm clocks and home sensors, to assist individuals with their daily tasks. These connected objects created new sources of data.

The rise of the Internet and social networks marked the beginning of the Big Data era, characterised by the unprecedented scale and diversity of digital information. Modern data tools enable the large-scale collection, storage, and analysis of this data, allowing organisations to detect patterns, generate insights, and optimise decision-making processes.

In the 2010s, machine learning was one of the new tools making the news in the data industry. Its algorithms learn from existing data to predict outcomes, without being explicitly programmed with the rules for doing so.
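As a rough illustration of what "learning from existing data" can mean, the sketch below fits a straight line to a handful of example points using ordinary least squares, one of the simplest techniques in this family. The function name `fit_line` and the sample numbers are hypothetical. The program is never told the rule linking inputs to outputs; it estimates that rule from the examples and then applies it to a new input.

```python
def fit_line(xs, ys):
    """Estimate slope and intercept of the best-fit line by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training examples (inputs and observed outputs).
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)

# Use the learned parameters to predict the output for an unseen input.
prediction = slope * 5 + intercept
print(slope, intercept, prediction)
```

Real machine learning systems use far richer models and far more data, but the principle is the same: parameters are estimated from examples rather than written by hand.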

More recently, generative AI has expanded these capabilities by not only making predictions but also creating entirely new content—such as text, images, and music—based on learned data patterns.

Data Everywhere: Opportunities and Challenges in the AI Era

Over the last decade, data technology has evolved greatly. Improvements are happening faster than ever before, and it is becoming increasingly important for users to adopt new technologies. This raises multiple questions regarding the management and impact of data, as well as ethical questions.

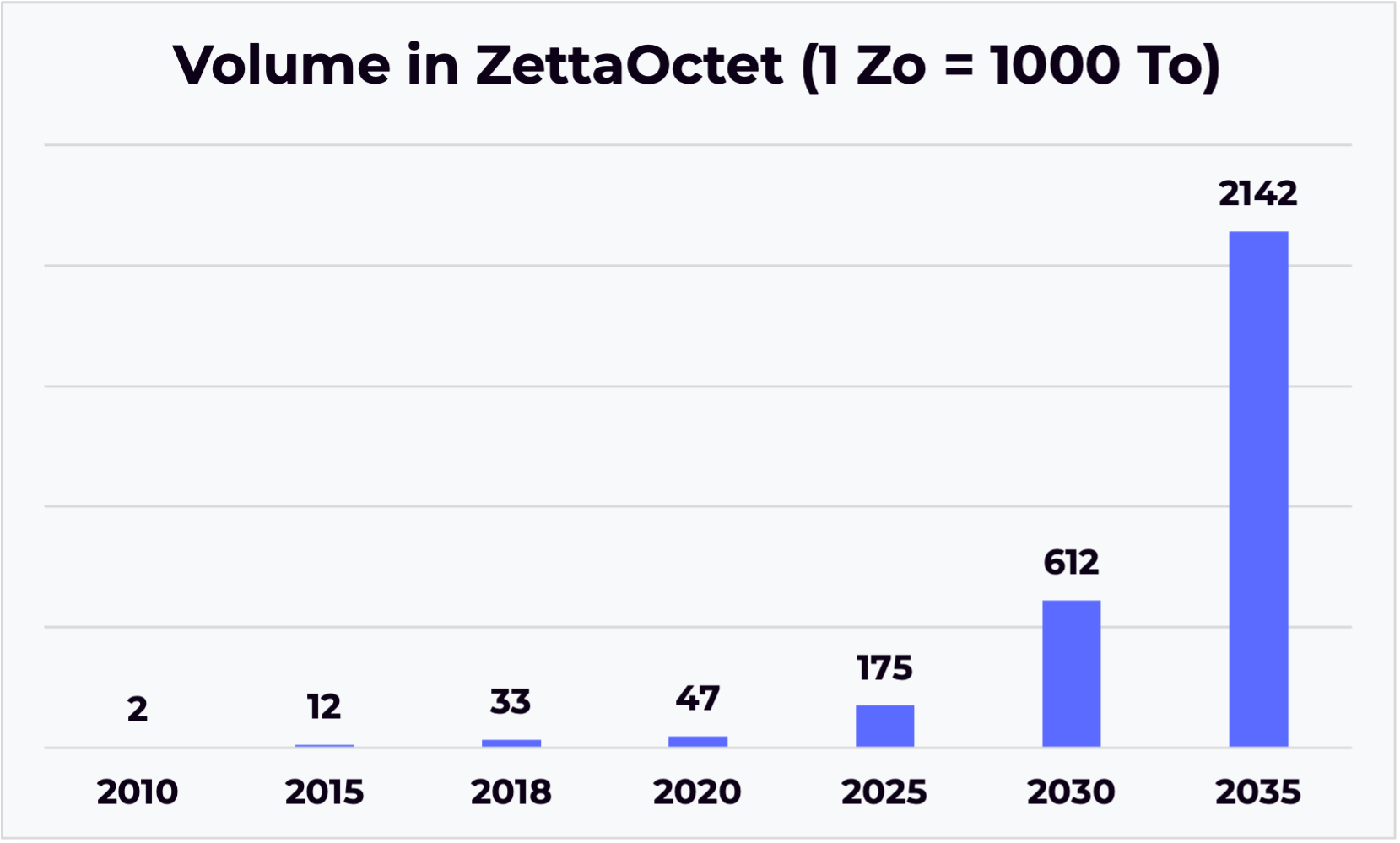

Firstly, data is now everywhere: in our social interactions with the social networks, in our jobs as every employee work in a more or less close manner with data. This cause a surge a massive increase of data over the last 15 years as it is shown in the following graph from statista.com:

The graph illustrates the rapid increase in global data usage since 2010. However, data technology remains relatively new for many business leaders and employees, and numerous organisations are only beginning to adopt and integrate data governance frameworks into their everyday work processes. This gap between technological growth and organisational maturity highlights the challenges associated with turning data availability into effective operational value.

From an individual perspective, the widespread use of data has led businesses to collect detailed information about users’ online behaviour, often at the expense of personal privacy. Users are increasingly required to share personal information, while companies must invest heavily in securing their infrastructures to protect sensitive data and comply with evolving regulatory requirements.

Since the AI boom began in 2022, concerns about data privacy have intensified alongside new strategic data challenges. Artificial intelligence systems depend on large volumes of high-quality data to function effectively, forcing organisations to rethink how data is collected, processed, and governed. Businesses must establish robust data pipelines capable of transforming raw data into reliable, trustworthy information suitable for AI-driven applications.
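A single step of such a pipeline might look like the sketch below. It is only an illustration under stated assumptions: the function `clean_records`, the field names and the sample records are all hypothetical. The step drops records with a missing or duplicate identifier, rejects amounts that cannot be parsed as numbers, and normalises the currency code.

```python
def clean_records(raw_records):
    """Return validated, normalised records; skip those that cannot be repaired."""
    cleaned = []
    seen_ids = set()
    for record in raw_records:
        order_id = record.get("order_id")
        if order_id is None or order_id in seen_ids:
            continue  # missing or duplicate identifier
        try:
            amount = float(record.get("amount"))
        except (TypeError, ValueError):
            continue  # amount is absent or not a number
        seen_ids.add(order_id)
        currency = str(record.get("currency", "")).strip().upper()
        cleaned.append({"order_id": order_id, "amount": amount, "currency": currency})
    return cleaned


# Hypothetical raw input with typical quality problems.
raw = [
    {"order_id": 1, "amount": " 19.90 ", "currency": "eur"},
    {"order_id": 1, "amount": "19.90", "currency": "EUR"},   # duplicate id
    {"order_id": 2, "amount": "n/a", "currency": "gbp"},     # unparseable amount
    {"order_id": None, "amount": "5", "currency": "usd"},    # missing id
    {"order_id": 3, "amount": 42, "currency": " usd "},
]

cleaned = clean_records(raw)
print(cleaned)
```

In practice, a production pipeline would also log what it rejects and why, so that data quality issues can be traced back to their source.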

In addition to privacy and governance issues, data storage entails significant financial and environmental costs. Storing and processing data requires vast amounts of energy, particularly in large-scale data centres. In the United States, data centres consumed approximately 183 TWh of electricity in 2024—around 4 % of total national electricity consumption—according to the Pew Research Center. This growing energy demand raises important questions about sustainability in an increasingly data-driven economy.

To conclude, as we enter 2026, technologies for creating and processing data are highly developed. The focus is no longer solely on data collection, but increasingly on adding value through analysis, interpretation, and support for decision-making processes. The development of agentic AI—systems capable of autonomously making decisions and executing actions—illustrates this shift. This evolution marks a new stage in the history of data technology.